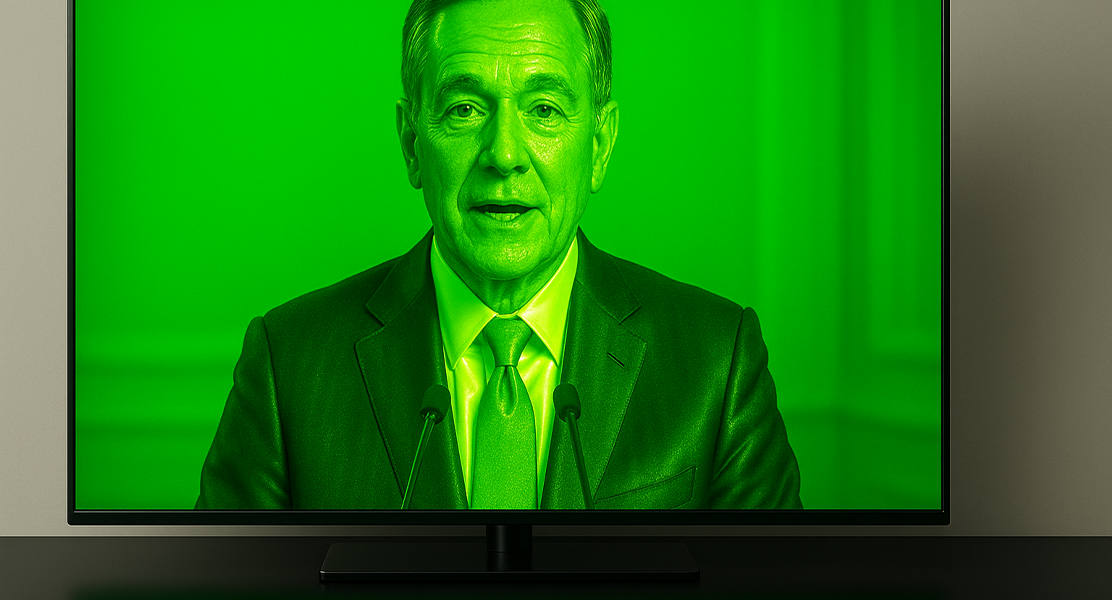

In an era dominated by digital media, truth and fabrication often blur together, and nowhere is this more evident than with deepfakes. Deepfakes are videos, images, or audio recordings generated or manipulated with machine learning so that people appear to say or do things they never said or did. The term, a blend of “deep learning” and “fake,” has become a symbol of both innovation and deception in the digital age.

How Deepfakes Work

Many deepfakes are created with generative adversarial networks (GANs), a setup in which two AI models compete. One model (the generator) produces synthetic content, while the other (the discriminator) tries to tell that content apart from real examples. Over thousands of training iterations, each model improves against the other, and the generator learns to produce fabrications that can be nearly impossible to distinguish from authentic media. GANs are not the only option: classic face-swap tools rely on autoencoders, and newer systems increasingly use diffusion models.
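To make the adversarial loop concrete, here is a minimal sketch in plain Python. It is a deliberately tiny toy under stated assumptions, not how production deepfake models are built: each "sample" is a single number rather than an image, real data is assumed to follow a normal distribution with mean 3 and standard deviation 1, the generator only learns an offset `b` that it adds to random noise, and the discriminator is a one-feature logistic regression. The function name `train_toy_gan` and all hyperparameters are illustrative choices.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function (avoids overflow in math.exp)."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean=3.0, real_std=1.0, steps=1000, batch=32,
                  d_steps=5, lr_d=0.1, lr_g=0.05, seed=0):
    """Toy 1-D GAN (illustrative assumption, not a real deepfake model)."""
    rng = random.Random(seed)
    # Generator G(z) = z + b: shifts standard-normal noise by a learned offset b.
    b = 0.0
    # Discriminator D(x) = sigmoid(w * x + c): estimated probability that x is real.
    w, c = 0.0, 0.0

    for _ in range(steps):
        # 1) Discriminator: a few gradient steps on binary cross-entropy,
        #    labelling real samples 1 and generated samples 0.
        for _ in range(d_steps):
            real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
            fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
            gw = gc = 0.0
            for x in real:
                g = -(1.0 - sigmoid(w * x + c))  # d/ds of -log D(x)
                gw += g * x
                gc += g
            for x in fake:
                g = sigmoid(w * x + c)  # d/ds of -log(1 - D(x))
                gw += g * x
                gc += g
            w -= lr_d * gw / batch
            c -= lr_d * gc / batch

        # 2) Generator: one step on the non-saturating loss -log D(G(z)),
        #    moving b so that generated samples look "more real" to D.
        gb = 0.0
        for _ in range(batch):
            x = rng.gauss(0.0, 1.0) + b
            gb += -(1.0 - sigmoid(w * x + c)) * w  # d/db of -log D(z + b)
        b -= lr_g * gb / batch

    return b, w, c


b, w, c = train_toy_gan()
print(f"learned offset b = {b:.2f} (real mean is 3.0)")
```

The discriminator takes several updates (`d_steps`) per generator update, following the k-step schedule of the original GAN training algorithm; in this toy it also helps the two players settle instead of circling the target. Because the generator's noise already has the real data's spread, an offset of `b = 3` makes the two distributions identical, which is the equilibrium of the game.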

Modern deepfake tools allow anyone with a computer and basic AI knowledge to swap faces, mimic voices, and even animate still images—all with uncanny realism. These capabilities have advanced so rapidly that even seasoned professionals struggle to verify authenticity without forensic analysis.

Applications: The Good and the Bad

Like most technologies, deepfakes exist on a spectrum from beneficial to malicious.

Positive Uses

- Entertainment & Film: Studios use deepfakes for de-aging actors or resurrecting deceased performers.

- Education & Research: Historical recreations and language-learning simulations benefit from realistic visual storytelling.

- Accessibility: Voice synthesis helps restore speech for people who’ve lost their voices.

Risks & Threats

- Misinformation & Propaganda: Deepfakes can spread false narratives, manipulate elections, or incite unrest.

- Fraud & Impersonation: Synthetic voices and faces have been used in scams costing companies millions.

- Reputation Damage: Individuals can be targeted with fabricated videos, including non-consensual sexually explicit deepfakes, leading to severe personal and professional consequences.

Detection and Defense

The race between deepfake creators and defenders is ongoing. Tech giants and startups alike are developing tools to detect deepfakes and to verify authentic media through:

- Digital Watermarking and Signing: Embedding watermarks in, or attaching cryptographic signatures to, authentic media at the time of capture.

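- AI Detection Models: Training machine-learning classifiers to spot subtle visual and audio inconsistencies, such as lip movements that do not match the speech or unnatural lighting and skin texture.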

- Blockchain Verification: Logging verified content at its source to ensure traceability and authenticity.
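The traceability idea behind blockchain verification comes down to a hash chain: each log entry records a fingerprint of the media plus the hash of the previous entry, so quietly editing a past record invalidates either that entry's own hash or the link stored in the next entry. The Python sketch below shows only that core data structure, assuming a single trusted machine; it omits the replication and consensus that make a real blockchain tamper-resistant, and the `ProvenanceLog` class and the `camera-01` source label are hypothetical.

```python
import hashlib
import json
import time


class ProvenanceLog:
    """Hypothetical append-only, hash-chained log of media fingerprints."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _entry_hash(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical fields always hash identically.
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, media: bytes, source: str) -> dict:
        """Register media at its source, linking to the previous entry."""
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "media_sha256": hashlib.sha256(media).hexdigest(),
            "source": source,
            "timestamp": time.time(),
            "prev_hash": prev,
        }
        entry = dict(body, hash=self._entry_hash(body))
        self.entries.append(entry)
        return entry

    def verify_chain(self) -> bool:
        """Recompute every hash and link; any edited entry breaks the chain."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or entry["hash"] != self._entry_hash(body):
                return False
            prev = entry["hash"]
        return True

    def is_registered(self, media: bytes) -> bool:
        """True if these exact bytes were logged (any edit changes the hash)."""
        digest = hashlib.sha256(media).hexdigest()
        return any(e["media_sha256"] == digest for e in self.entries)


log = ProvenanceLog()
log.append(b"original video bytes", source="camera-01")
print(log.is_registered(b"original video bytes"))  # True
print(log.is_registered(b"edited video bytes"))    # False
log.entries[0]["source"] = "someone-else"          # tamper with history
print(log.verify_chain())                          # False
```

On a single machine, an attacker who can rewrite the entire chain defeats this check; distributing copies of the log across independent parties is what a real blockchain adds to prevent that.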

Platforms like RealityChek and the Content Authenticity Initiative (CAI) are pioneering systems that verify the origin and integrity of digital content, helping restore trust in an increasingly synthetic media landscape.
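Verifying origin and integrity typically rests on binding a cryptographic tag to the media and its capture metadata at the moment of capture, so that any later change is detectable. The sketch below illustrates that idea with Python's standard library. It is a simplification under loud assumptions: it uses an HMAC, which requires the verifier to hold the same secret key, as a stand-in for the public-key signatures used by real provenance standards such as C2PA, and the names `sign_at_capture`, `verify`, and `DEVICE_KEY` are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical device key; a real camera would keep a private signing key in
# secure hardware, and verifiers would use the matching public key instead.
DEVICE_KEY = b"demo-device-secret"


def _canonical(manifest: dict) -> bytes:
    # Sorted-key JSON so signer and verifier hash exactly the same bytes.
    return json.dumps(manifest, sort_keys=True).encode("utf-8")


def sign_at_capture(media: bytes, metadata: dict, key: bytes = DEVICE_KEY) -> dict:
    """Bind the media's hash and its capture metadata under one HMAC tag."""
    manifest = {
        "media_sha256": hashlib.sha256(media).hexdigest(),
        "metadata": metadata,
    }
    tag = hmac.new(key, _canonical(manifest), hashlib.sha256).hexdigest()
    return {"manifest": manifest, "tag": tag}


def verify(media: bytes, record: dict, key: bytes = DEVICE_KEY) -> bool:
    """Check the manifest is untampered and still matches the media bytes."""
    expected = hmac.new(key, _canonical(record["manifest"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, record["tag"]):
        return False  # manifest altered, or tagged with a different key
    return record["manifest"]["media_sha256"] == hashlib.sha256(media).hexdigest()


photo = b"raw sensor bytes"
record = sign_at_capture(photo, {"device": "cam-42", "captured": "2024-05-01T12:00:00Z"})
print(verify(photo, record))            # True
print(verify(photo + b"edit", record))  # False: content changed after capture
```

A valid tag only proves that a file is unchanged since it was signed; it cannot show that an unsigned video is fake, which is why provenance systems complement rather than replace detection models.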

Regulatory and Ethical Landscape

Governments worldwide are beginning to respond. The European Union’s AI Act requires that deepfakes be disclosed as artificially generated or manipulated, and the proposed U.S. Deepfake Accountability Act would require similar disclosure and penalize deceptive uses of synthetic media. However, enforcement remains challenging, as deepfake technology evolves faster than most legislative frameworks.

Ethically, society faces a dilemma: How do we preserve freedom of expression while protecting individuals and institutions from digital deception?

Conclusion

Deepfakes represent one of the most powerful—and dangerous—tools in the AI revolution. They showcase human creativity at its peak but also test our ability to discern truth in a world saturated with information. As detection technology and digital verification platforms evolve, the next decade will define how societies balance innovation, security, and authenticity.

The key to combating deepfakes lies not just in technology, but in awareness. Knowing what’s possible is the first step toward protecting reality itself.