Banking fraud has always evolved alongside technology. From stolen checks to phishing emails, criminals have consistently adapted to exploit weaknesses in financial systems. But now, the threat landscape has entered a far more dangerous phase. The emergence of deepfakes—realistic synthetic videos, voices, and images created using artificial intelligence—has opened a new dimension of deception that threatens banks, businesses, and consumers alike.

Traditional fraud relied on stolen credentials, forged documents, or text-based social engineering such as phishing. Deepfakes let criminals impersonate real people with striking accuracy. A fraudster no longer needs to guess a password or steal a card; they can simply appear to be the CEO, the compliance officer, or the account holder, both visually and vocally.

What Are Deepfakes?

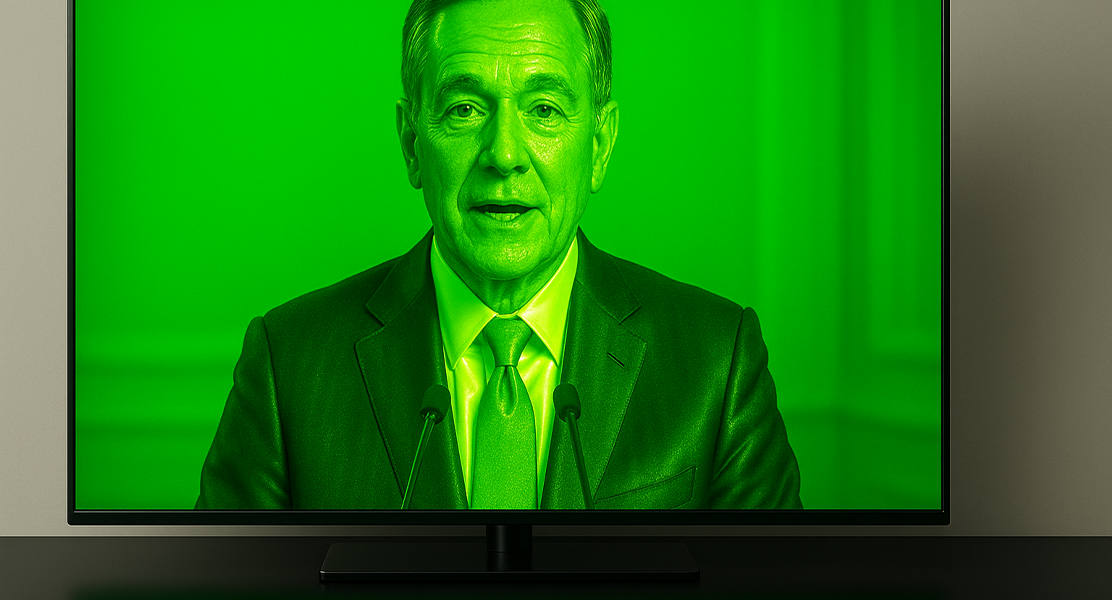

Deepfakes are AI-generated media that convincingly replicate real people’s faces, voices, or gestures. With modern tools, a user can clone a voice from just a few seconds of audio or generate a video of someone speaking sentences they never said. For banks, which rely heavily on trust and identity verification, this is a serious threat.

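Imagine a bank manager receiving a video call from their CEO authorizing an urgent wire transfer. The voice, face, and tone all seem right, yet the entire call could be synthetic. This scenario is no longer hypothetical; it has already happened in multiple real-world cases. In one widely reported 2024 case, an employee of the engineering firm Arup in Hong Kong transferred about US$25 million after a video conference in which the company’s CFO and other colleagues were deepfakes.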

How Deepfakes Are Making Banking Fraud Worse

Executive Impersonation

One of the most dangerous applications of deepfakes in banking is the impersonation of senior executives. Criminals can create fake video calls or voice messages in which a senior official appears to authorize a large payment. Employees, pressured by authority and urgency, may comply before realizing the request is fake.

Defeating Biometric Security

Banks increasingly use voice and facial recognition to verify clients, and deepfakes can, in some cases, bypass these systems. AI-generated voices can trick call-center voice authentication, while synthetic faces can fool liveness checks during digital onboarding. As generation tools improve, the artifacts that detection systems look for become fainter, leaving less room for error.

Synthetic Identities and Onboarding

Deepfake tools can fabricate entire customers, complete with realistic IDs, selfies, and video verification clips. Criminals use these synthetic identities to open accounts, launder money, and run phishing campaigns. For banks conducting thousands of remote verifications daily, telling real applicants from fabricated ones becomes a monumental challenge.

Consumer-Targeted Scams

Deepfakes aren’t just used against banks; consumers are also victims. Scammers can clone a loved one’s voice asking for money, or pose as a bank representative on a video call. With such lifelike impersonations, even cautious customers can be deceived.

Why Detection Is So Difficult

The sophistication of AI-generated media is increasing faster than the tools designed to detect it. Deepfake technology is now widely available, inexpensive, and easily automated. What once required advanced computer skills can now be done with consumer-grade software.

Most fraud-detection systems were built to flag abnormal transactions or network behavior—not to authenticate human identity. When a fraudster’s deepfake passes every visible and audible test, banks are left relying on behavioral patterns or secondary data signals to detect anomalies.

The Real-World Consequences

The impact of deepfake-enabled fraud extends far beyond financial loss. It erodes public confidence in digital banking, weakens internal trust within organizations, and damages reputations.

- Financial institutions are reporting massive spikes in fraud attempts involving synthetic media.

- Industry forecasts project that losses from AI-driven fraud could reach tens of billions of dollars a year within a few years.

- Employees are increasingly being targeted in elaborate “CEO fraud” and “payment authorization” schemes where deepfakes replace traditional phishing emails.

These attacks not only drain accounts—they challenge the very notion of digital truth.

How Banks Can Respond

Strengthen Authentication Layers

Reliance on visual or voice confirmation alone is no longer sufficient. Banks must integrate behavioral biometrics, device verification, and cryptographic validation methods to confirm that both the content and the person are authentic.

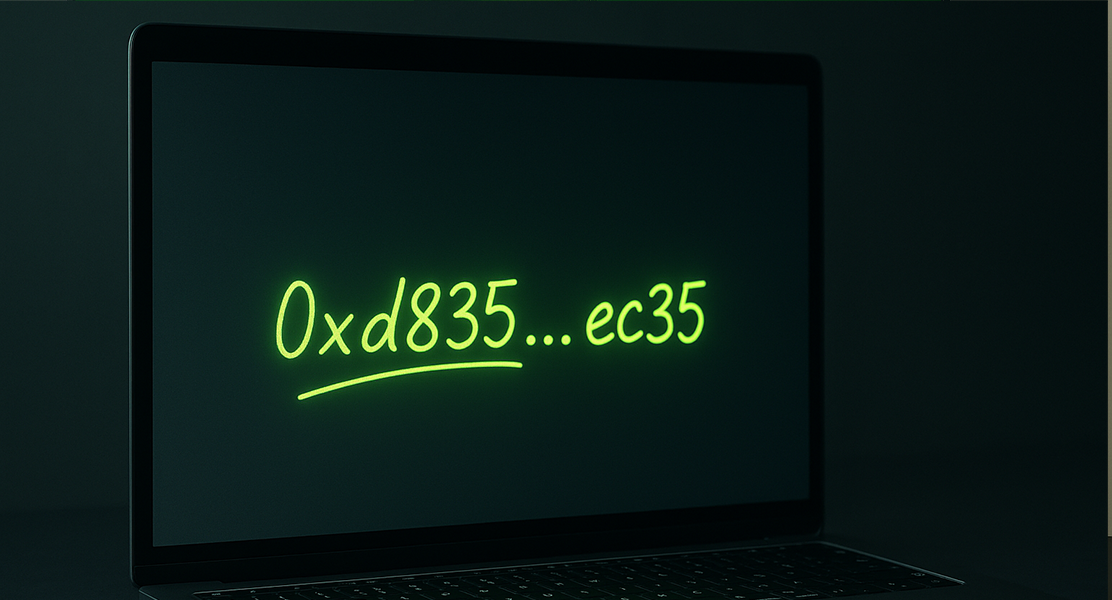

Adopt Cryptographic Signatures

Every communication, whether a transaction request, document, or video, should be verifiable through a digital signature. A valid signature shows that the content was signed with a key held by a known, legitimate sender and has not been altered since it was signed. Such measures could form the backbone of “authenticity verification” systems in future banking.
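
To make the idea concrete, here is a minimal sketch of tagging and verifying a wire-transfer instruction in Python. Python’s standard library has no public-key signature API, so this sketch substitutes HMAC-SHA256, a shared-secret message authentication code. A real deployment would more likely use an asymmetric scheme such as Ed25519 or ECDSA, so that verifiers never hold the signing key. The key, the request fields, and the helpers `sign_request` and `verify_request` are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical demo key; a real system would keep keys in an HSM or key-management service.
# HMAC stands in for a true public-key digital signature in this sketch.
SECRET_KEY = b"example-key-do-not-use-in-production"


def canonical_bytes(request: dict) -> bytes:
    """Serialize deterministically so signer and verifier process identical bytes."""
    return json.dumps(request, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_request(request: dict, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 tag over the canonical form of the request."""
    return hmac.new(key, canonical_bytes(request), hashlib.sha256).hexdigest()


def verify_request(request: dict, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Recompute the tag and compare it in constant time to avoid timing leaks."""
    expected = sign_request(request, key)
    return hmac.compare_digest(expected, tag)


transfer = {"from": "ACME-OPS", "to": "SUPPLIER-42", "amount_usd": 250000, "ref": "INV-1001"}
tag = sign_request(transfer)

print(verify_request(transfer, tag))  # True: content unchanged

tampered = dict(transfer, to="ATTACKER-99")
print(verify_request(tampered, tag))  # False: destination account altered
```

For deepfake defense, the key property is that a convincing video of an executive saying “approve it” cannot produce a valid tag: the tag depends on possession of the key, not on how the requester looks or sounds.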

Enhance Employee Training

Fraud prevention isn’t just technical; it’s cultural. Employees must be trained to verify instructions independently, even when they come from familiar faces or voices. Confirming a request through a separate channel, such as calling the executive back on a number from the internal directory rather than one supplied in the message, can stop many executive impersonation scams before they succeed.

AI Against AI

Financial institutions must turn the same AI capabilities that fraudsters exploit against them. Deepfake-detection models can identify subtle anomalies in speech patterns, facial movements, or encoding artifacts that human eyes and ears might miss. However, these models must be updated continuously as deepfake methods evolve.

Regulatory and Industry Cooperation

The fight against AI-driven fraud requires global coordination. Regulators, cybersecurity firms, and financial institutions must share intelligence on emerging threats, detection tools, and incident reports. A unified standard for digital authenticity could eventually form the foundation for secure financial communications.

The Future of Trust in Banking

The rise of deepfakes marks a turning point for the financial sector. Where banks once relied on human trust and personal recognition, they must now rely on cryptographic proof of who sent what. The industry’s future will depend on verifiable authenticity, not perception.

In the coming years, banking security will evolve from protecting transactions to protecting reality itself. Those who fail to adapt will not only face greater losses—they’ll risk losing the confidence of the very customers their systems were built to protect.

Deepfakes have blurred the line between the real and the artificial. For the banking world, the only way forward is to restore that line—with technology, transparency, and trust as its foundation.